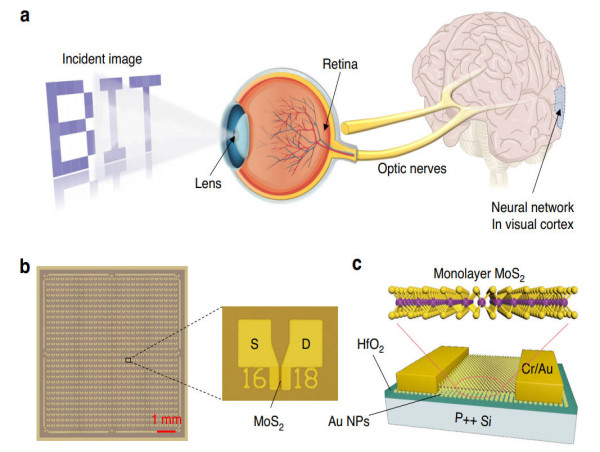

GA, UNITED STATES, March 5, 2025 /EINPresswire.com/ -- In a notable development for artificial intelligence, researchers have unveiled a 28×28 synaptic device array designed for artificial visual systems. The array, measuring a compact 0.7 cm × 0.7 cm, integrates sensing, memory, and processing to mimic functions of the human visual system. Built on wafer-scale monolayer molybdenum disulfide (MoS2), with gold nanoparticles serving as the electron capture layer, the array responds to both optical and electrical signals in a coordinated way. It can write and erase images and has achieved 96.5% accuracy in digit recognition, a significant step toward large-scale neuromorphic systems.

The human visual system processes complex visual data efficiently through an interconnected network that allows for parallel processing. However, current artificial vision systems face numerous challenges, including circuit complexity, high power consumption, and difficulties in miniaturization. These issues arise from the separation between signal devices and processing units, hindering the ability to process visual information in parallel. Despite previous attempts, simulating a complete, biologically inspired vision system with a single device has remained elusive, driving the need for more integrated, efficient solutions capable of real-time processing.

On January 13, 2025, a study (DOI: 10.1038/s41378-024-00859-2) published in Microsystems & Nanoengineering introduced a game-changing solution to these longstanding challenges. Led by a team from the Beijing Institute of Technology, the study presents a 28×28 synaptic device array, fabricated using MoS2 floating-gate field-effect transistors. This device not only replicates the neural networks of the human visual system but also delivers exceptional optoelectronic synaptic performance, setting the stage for more efficient and integrated artificial visual systems.

The research team from Beijing Institute of Technology designed a 28×28 array in which each device mimics the synaptic plasticity found in the human visual system. Using MoS2 floating-gate transistors combined with gold nanoparticles as electron capture layers, they achieved stable and uniform optoelectronic performance, capable of simulating key synaptic behaviors such as excitatory postsynaptic current (EPSC) and paired-pulse facilitation (PPF). The array demonstrated an on/off ratio of around 10⁶ and an average mobility of 8 cm² V⁻¹ s⁻¹. Notably, the array was able to store and process image data, such as the emblem of Beijing Institute of Technology, showcasing its potential for optical data processing. Furthermore, the ability to adjust light intensity and fine-tune recognition accuracy provides a new method for optimizing the system's performance in varying lighting conditions.
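For readers unfamiliar with these figures of merit, the sketch below shows how a PPF index and an on/off ratio are commonly computed from measured currents. It uses the conventional textbook definitions (second EPSC peak divided by the first, and on-state current divided by off-state current); the function names and all numeric values are hypothetical illustrations, not data or code from the study.

```python
def ppf_index(a1: float, a2: float) -> float:
    """Paired-pulse facilitation index in percent.

    a1, a2: EPSC peak amplitudes after the first and second pulse.
    Conventional definition (an assumption here): 100 * A2 / A1.
    """
    if a1 <= 0:
        raise ValueError("first-pulse amplitude must be positive")
    return 100.0 * a2 / a1


def on_off_ratio(i_on: float, i_off: float) -> float:
    """Ratio of on-state to off-state drain current (dimensionless)."""
    if i_off <= 0:
        raise ValueError("off-state current must be positive")
    return i_on / i_off


if __name__ == "__main__":
    # Hypothetical example values in amperes, chosen only for illustration.
    first_peak, second_peak = 1.0e-9, 1.5e-9
    print(f"PPF index: {ppf_index(first_peak, second_peak):.1f}%")

    # Hypothetical currents whose ratio is on the order of 10^6.
    print(f"On/off ratio: {on_off_ratio(1.0e-5, 1.0e-11):.1e}")
```

A PPF index above 100% means the second pulse evoked a larger response than the first, which is the facilitation behavior the term refers to.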

Jing Zhao, the corresponding author of the study, emphasized the importance of these findings: “Our results offer a viable pathway toward large-scale integrated artificial visual neuromorphic systems. The performance of the MoS2-based synaptic array represents a major step toward practical applications, from device-level simulations to system-wide integration.”

These advances in artificial synaptic neural networks offer several advantages, including high integration, stable uniformity, and strong parallel processing capabilities. These attributes could transform the performance of computational systems. The network’s ability to simultaneously process optoelectronic signals and adjust synaptic weights via light signals has already been demonstrated in handwritten digit recognition, with an accuracy of 96.5%. This result opens up possibilities for deep learning and artificial vision, potentially leading to smarter, more efficient systems in the near future.

DOI

10.1038/s41378-024-00859-2

Original Source URL

https://doi.org/10.1038/s41378-024-00859-2

Funding information

This work is supported by the National Natural Science Foundation of China (NSFC, Grant No. 62127810, 61804009), the State Key Laboratory of Explosion Science and Safety Protection (QNKT24-03), Xiaomi Young Scholar, the Beijing Institute of Technology Research Fund Program for Young Scholars, and the Analysis & Testing Center, Beijing Institute of Technology.

Lucy Wang

BioDesign Research