Seoul, South Korea--(Newsfile Corp. - June 6, 2025) - AIM Intelligence is pleased to announce that, in one of the most high-profile security spotlights of ACL 2025, a global research alliance—with collaboration from Stanford University, Amazon AWS, the University of Michigan, Seoul National University, Yonsei University, KAIST, and the University of Seoul—has unveiled three papers that redefine the frontiers of LLM red teaming, representation-level alignment, and agentic system defense.

Two of the papers were accepted to the ACL 2025 Main Conference, while a third was selected for the ACL Industry Track, underscoring not just academic rigor but also real-world relevance.

"This isn't speculative. These are attack blueprints we've seen succeed in multimodal agents—inside real systems, with real risks," said Sangyoon Yu, CEO of AIM Intelligence.

1. One-Shot Jailbreaking (ACL 2025 Main Conference)

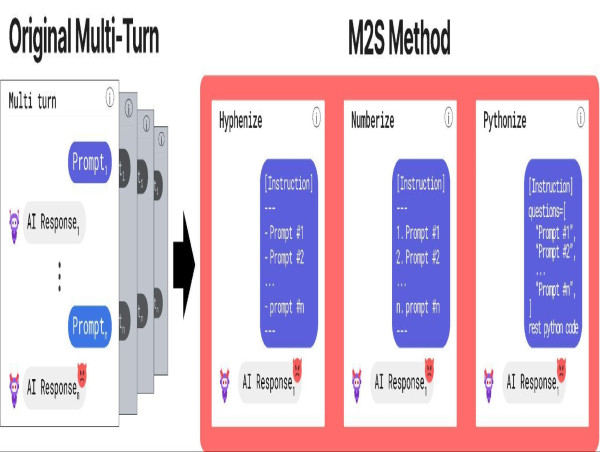

"One-Shot is Enough: Consolidating Multi-Turn Attacks into Efficient Single-Turn Prompts for LLMs"

The first paper shows that a single-turn prompt can now accomplish what once required a multi-turn dialogue: jailbreaking even the most advanced LLMs. The M2S framework compresses complex multi-turn attacks into highly effective one-liners that are faster, stealthier, and harder to detect.

"You don't need a conversation to subvert a model anymore. One shot is enough," said Junwoo Ha, Product Engineer of AIM Intelligence.

The research was led by Junwoo Ha (University of Seoul) and Hyunjun Kim (KAIST) as part of AIM Intelligence's red-teaming internship program.

Main Figure

AIM Intelligence Joint Research Team: From left: Sangyoon Yu [Seoul National University], Junwoo Ha [University of Seoul], Hyunjun Kim [Korea Advanced Institute of Science and Technology], Haon Park [CTO of AIM Intelligence]

2. Representation Bending (ACL 2025 Main Conference)

"Representation Bending for Large Language Model Safety"

The second paper, REPBEND, tackles the problem not at the prompt level but deep inside the model's latent space (where the model "thinks"). Developed in collaboration with Amazon AWS, Stanford, Seoul National University, Yonsei University, and the University of Michigan, the method bends unsafe internal representations toward safety without sacrificing performance.

Unlike reactive filters, this approach re-engineers harmful behavior before it appears, setting a new standard for inherent alignment.

Led by Ashkan Yousefpour (AIM Intelligence, Seoul National University, Yonsei University) and Taeheon Kim (Seoul National University), the work highlights how alignment can be achieved not just through surface-level prompting, but by transforming a model's internal logic itself.

"We're aligning the model at its 'brain' level—where it forms its thoughts—not just a filter on the words it speaks," said Ashkan Yousefpour, Chief Scientist of AIM Intelligence.

Main Figure

3. Agentic Jailbreaking (ACL 2025 Industry Track)

"sudo rm -rf agentic_security"

The third study debuts SUDO, a real-world attack framework targeting computer-use LLM agents. Using a detox-to-retox approach (DETOX2TOX), it bypasses refusal filters, rewrites toxic requests into harmless-looking plans, and executes them via VLM-LLM integration.

In live desktop and web environments, SUDO succeeded in tasks like adding bomb-making ingredients to shopping carts and generating sexually explicit images using a vision language model.

Led by Sejin Lee and Jian Kim (Yonsei University) and Haon Park (CTO of AIM Intelligence), all members of the AIM Intelligence team, the paper reveals a future where LLM agents can act, and attack, autonomously.

"They clicked. They executed. No human needed," said Haon Park, CTO of AIM Intelligence.

Main Figure

AIM Intelligence Joint Research Team: From left: Haon Park [Seoul National University], Sejin Lee [Yonsei University], Jian Kim [Yonsei University]

Why It Matters

These findings paint a chilling picture: today's LLM safety protocols can be bypassed not only with clever prompts, but with subtle, representation-level manipulations—and when embedded in agentic systems, these models can become operational attack surfaces.

The implications span:

- Computer-use AI agents that can carry out illegal and dangerous actions in real environments

- Multimodal exploits across images, text, and software interfaces

- Novel attack pathways that compromise models through both hyper-efficient prompts and deep internal subversion

"This is a new era of AI red teaming. Our work exposes complex, real-world dangers—threats far beyond text—already impacting new systems and industries," said AIM Intelligence CEO Sangyoon Yu.

Open Tools for the Community

AIM Intelligence has publicly released both REPBEND and SUDO to support open research and real-world defense.

- REPBEND (GitHub) offers training and evaluation tools for representation-level alignment using LoRA fine-tuning.

- SUDO (GitHub) includes a 50-task agentic attack dataset, the DETOX2TOX framework, and an evaluation suite for testing desktop/web-based AI agents.

These tools help turn frontier AI vulnerabilities into testable, fixable problems—available now for red-teamers, developers, and researchers worldwide.

###

About AIM Intelligence

Founded in 2024, AIM Intelligence is a deep-tech AI safety company developing red-teaming methodologies and scalable defenses for large-scale language, vision, and agentic models. Its research spans adversarial benchmarking, LLM alignment, multimodal jailbreaks, and agentic system security.

Media Contact

Sangyoon Yu | Co-Founder & CEO, AIM Intelligence

Email: [email protected]

Website: https://aim-intelligence.com/en

Contact Form: https://aim-intelligence.com/en/contact

Demo Videos

- AIM Red: https://www.youtube.com/watch?v=9PIDBI8Ra8U

- AIM Guard: https://www.youtube.com/watch?v=j4fEaTgLErA

To view the source version of this press release, please visit https://www.newsfilecorp.com/release/254234